The Prescient Blog

IN THE SPOTLIGHT

Why Drilling Equipment Condition-based Maintenance Needs Operational Context?

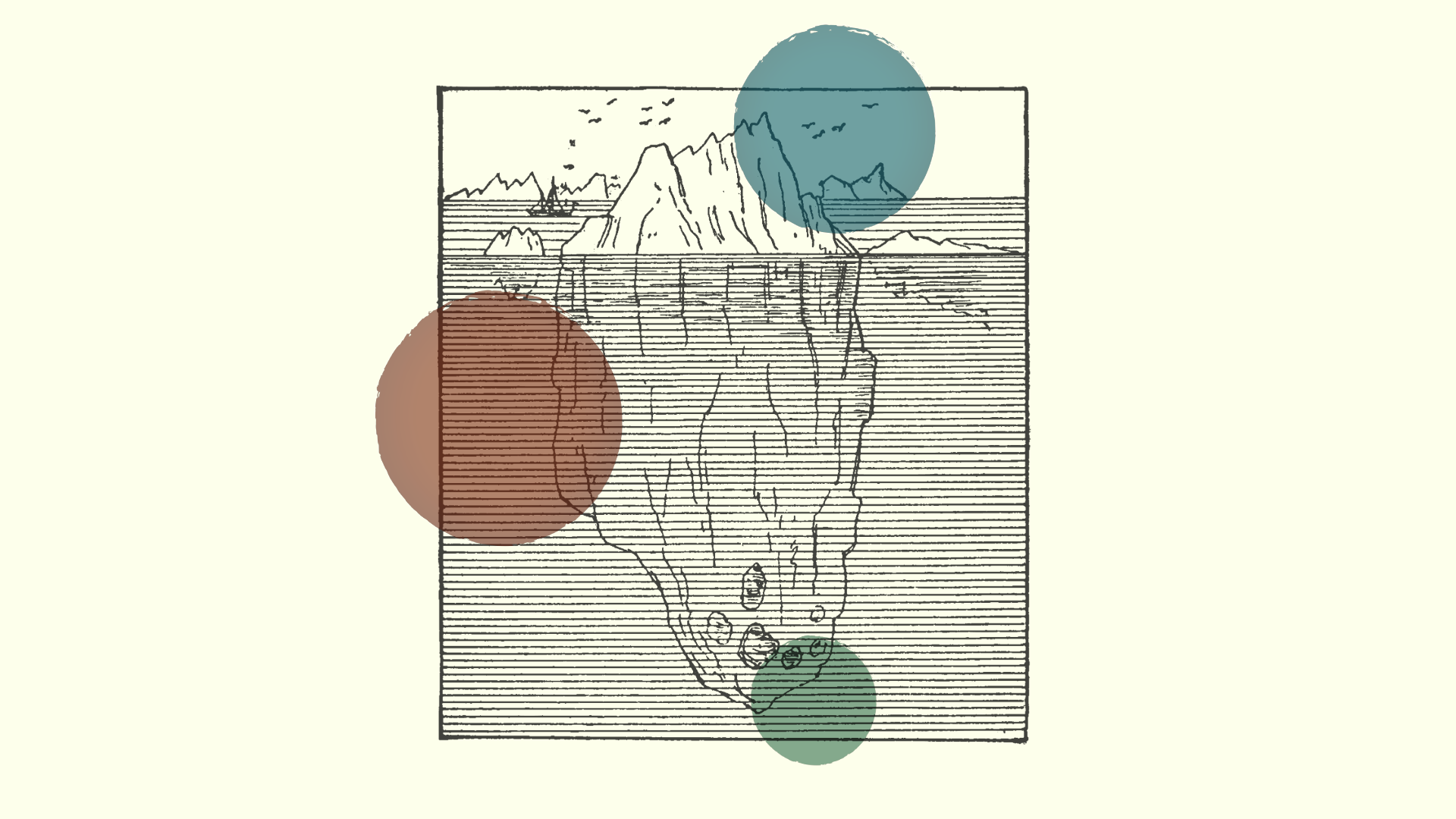

While sensor-based condition-based maintenance (CBM) can work very well for equipment with constant load, such as that used in manufacturing plants, it falls short when it comes to drilling equipment and smart predictive maintenance.

What is AI? A Primer for Subject Matter Experts

We made a primer for subject matter experts that covers the basics: what AI is, how it is deployed in industrial environments, its benefits and limitations, and what domain experts should look for when choosing a vendor with AI-assisted technology.

Why did we build a usage-based fatigue model using GenAI technology?

While sensor-based condition-based maintenance (CBM) can be effective in detecting potential failures, it does not understand how equipment performs. We want to go beyond sensor-based CBM.

Build large-scale data solutions in distributed functional blocks

Big data was on the rise, and the world needed a better way to process data. The default approach was to develop data solutions in software. That method is still the default today, and it comes with its fair share of challenges.

Practicing digital transformation through incremental change

We recognized an opportunity to leverage best practices from other industries in monitoring the health of our assets, enabling a more proactive approach to maintenance.

Why drilling equipment condition-based maintenance needs operational context?

While sensor-based CBM can work very well for equipment with constant load, it falls short when it comes to drilling equipment. Drilling equipment operates with variable load: the workload ramps up and down depending on drilling conditions.
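
As a rough illustration of why variable load matters, the sketch below compares a fixed alarm threshold with one that accounts for operating load. Everything in it is an assumption made for illustration: the `Reading` fields, the linear `expected_vibration` baseline, and the numeric limits are hypothetical and do not come from the article or from any Prescient product.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    load_pct: float   # operating load as a percentage of rated load (assumed signal)
    vibration: float  # vibration amplitude in arbitrary units (assumed signal)


FIXED_LIMIT = 5.0     # hypothetical fixed alarm threshold


def expected_vibration(load_pct: float) -> float:
    """Hypothetical linear baseline: vibration expected at a given load."""
    return 1.0 + 0.05 * load_pct


def fixed_alarm(reading: Reading) -> bool:
    """Constant-load style check: alarm whenever vibration exceeds a fixed limit."""
    return reading.vibration > FIXED_LIMIT


def context_alarm(reading: Reading, tolerance: float = 1.5) -> bool:
    """Load-aware check: alarm only when vibration exceeds the level
    expected at the current load by more than the tolerance."""
    residual = reading.vibration - expected_vibration(reading.load_pct)
    return residual > tolerance


# Heavy drilling: high vibration, but in line with the high load.
heavy_load = Reading(load_pct=90.0, vibration=5.8)
# Light load: vibration is below the fixed limit but unusually high for this load.
light_load = Reading(load_pct=10.0, vibration=4.0)
```

With these made-up numbers, the fixed threshold raises an alarm for `heavy_load` and stays silent for `light_load`, while the load-aware check does the opposite. That is the kind of false alarm and missed detection that operational context is meant to reduce.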

What digital transformation can learn from the automotive industry

We, the consumers, demand consistent, reliable performance in every product or service we utilize regardless of frequency. A car that rarely works is a lemon, but do we have a comparable term for digital products and processes?

How operational digital twins improve capital discipline

Operational digital twins offer real-time insights into asset health and equipment operations, helping businesses improve capital discipline and reduce costs.

What is context-aware condition-based maintenance (CBM)?

Context-aware CBM enhances traditional maintenance by integrating operational data, improving accuracy, and reducing false failure detections.

Who uses an operational digital twin solution?

Manufacturing and industrial companies use digital twins to monitor equipment health, predict failures, and streamline production processes, reducing downtime and maintenance costs.

What are the benefits of an operational digital twin?

Dive into how operational digital twins can transform your asset management by predicting failures and optimizing performance.

What is an asset life model for condition-based maintenance?

Asset Life Models revolutionize asset management by tracking performance from day one, offering precise failure forecasts, and improving vendor comparisons.

AI-driven root cause analysis and how to apply it

Modern production systems can reap the benefits of AI-driven root cause analysis. AI is very good at finding patterns in large, complex data sets.

Which enterprise software licensing model is right for your organization?

Today’s software licensing models have become very complicated. We’ll briefly explain the three main licensing models (rent, buy, and hybrid) to help you determine which one is right for you.

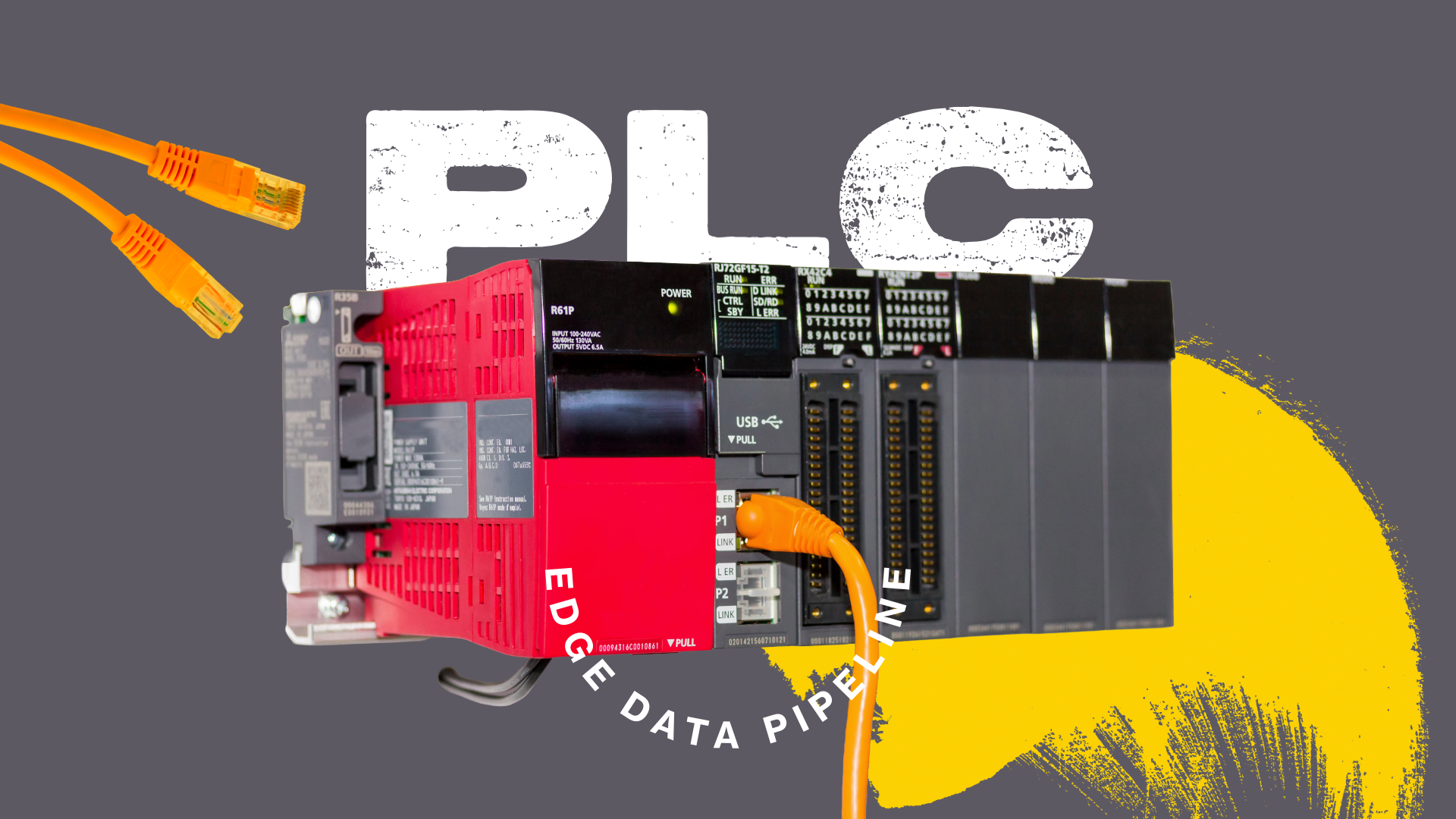

How to build an edge data pipeline for PLCs at the edge

Enhance your PLC operations with an edge data pipeline. Prescient simplifies setup, offering real-time monitoring, dynamic configuration, and seamless integration. Optimize efficiency, reduce downtime, and gain valuable insights.

High-speed blob transfer in Node-RED: Leveraging IO-Link sensor data pipeline

Leverage the power of IO-Link for lightning-fast data collection. By configuring your IO-Link Master in blob transfer mode and optimizing Node-RED nodes, you can efficiently handle large volumes of data. This is crucial for industries demanding real-time insights, such as automotive manufacturing and energy.

Why are large-scale industrial data solutions so hard to build?

Industrial data is very disparate. It comes from different sources such as equipment, sensors, controllers, and historians. It also comes from different generations of hardware, where each generation can have different data interfaces and data protocols. Each data protocol requires a different data connector, and these connectors are very time-consuming to implement and support.

What is an automated IO-Link data pipeline?

Gain a competitive edge in the Industry 4.0 landscape. Automated IO-Link pipelines empower you to automate data collection, gain real-time insights, and make data-driven decisions to optimize efficiency and productivity.

A dynamic, configurable pipeline for PLC data

Don't let your PLC data become a burden. Dynamic pipelines empower you to customize data processing, streamline workflows, and extract maximum value from your industrial data.

6 Ways to improve your oil and gas asset management with operational digital twins

The future of oil and gas asset management is here! This article dives into 6 essential digital twin strategies to streamline equipment monitoring, enable predictive maintenance, prioritize safety, and optimize efficiency across your operations.

How to format raw sensor data into JSON objects using Node-RED

Raw sensor data holds immense potential, but it needs the right tools for analysis. This article explores how Node-RED and JSON can be your secret weapon, transforming raw data into a format ready for further processing, empowering you to build robust and insightful edge data applications based on sensor data.
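
To make the idea concrete, here is a minimal sketch of turning one raw sensor line into a JSON object. It is written in Python for illustration only; in Node-RED this kind of logic would typically sit in a function node written in JavaScript. The comma-separated input layout and the field names `sensor_id`, `timestamp`, and `value` are assumptions, not the format used in the article.

```python
import json


def format_reading(raw: str) -> str:
    """Convert an assumed 'sensor_id,timestamp,value' line into a JSON string."""
    sensor_id, timestamp, value = raw.strip().split(",")
    payload = {
        "sensor_id": sensor_id,       # hypothetical field name
        "timestamp": int(timestamp),  # assumed to be Unix epoch seconds
        "value": float(value),        # assumed to be a single numeric measurement
    }
    return json.dumps(payload)


# Example with a made-up raw line, including a trailing newline as a serial feed might send.
formatted = format_reading("sensor-01,1700000000,23.5\n")
```

Typing the fields at this stage (integer timestamp, float value) means downstream processing does not have to re-parse strings.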